Damjan Stamcar: Can you provide a brief description of your research collaboration project (Sony RAP 2020), particularly how it differs from the state of the art?

Dr. Federico Paredes Vallés: Event cameras have recently gained significant traction because they open new avenues for low-latency and low-power solutions to complex computer vision problems. To unlock these solutions, we need algorithms that exploit the unique nature of event data. However, the current state of the art is still heavily influenced by the frame-based literature and fails to deliver on these promises. These solutions usually accumulate events over relatively long time windows to create artificial “event frames”, which are then processed by conventional (dense) machine learning algorithms. In this RAP collaboration, we addressed this limitation by proposing a novel self-supervised learning pipeline for the sequential estimation of event-based optical flow that allows models to scale to high inference frequencies. At its core is a continuously running, stateful neural model trained to estimate optical flow from sparse sets of events using a novel formulation of contrast maximization that makes it robust to nonlinearities and varying statistics in the input events. Results across multiple datasets confirm the effectiveness of our method, which establishes a new state of the art in accuracy among approaches trained or optimized without ground truth.
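To make the idea of contrast maximization concrete, here is a minimal sketch of the classic formulation: events in one window are warped to a reference time with a candidate constant flow, accumulated into an image of warped events, and the candidate that yields the sharpest (highest-variance) image wins. This is not the novel formulation described above; it uses a brute-force search instead of training a network, nearest-pixel voting instead of bilinear voting, and a single constant flow. All function names and the synthetic moving-edge data are hypothetical illustrations.

```python
import math


def warp(events, flow, t_ref=0.0):
    """Move each event (x, y, t, p) along a constant flow (vx, vy), in px/s, to time t_ref."""
    vx, vy = flow
    return [(x + (t_ref - t) * vx, y + (t_ref - t) * vy) for x, y, t, _p in events]


def image_of_warped_events(points, width, height):
    """Count warped events per pixel (nearest-pixel voting; events outside the image are dropped)."""
    img = [[0] * width for _ in range(height)]
    for x, y in points:
        xi, yi = math.floor(x + 0.5), math.floor(y + 0.5)
        if 0 <= xi < width and 0 <= yi < height:
            img[yi][xi] += 1
    return img


def contrast(img):
    """Variance of the image of warped events: better-aligned events give a higher score."""
    vals = [v for row in img for v in row]
    mean = sum(vals) / len(vals)
    return sum((v - mean) ** 2 for v in vals) / len(vals)


def contrast_of_flow(events, flow, width, height):
    return contrast(image_of_warped_events(warp(events, flow), width, height))


# Hypothetical data: a vertical edge starting at x = 5 and moving right at 8 px/s.
# Each of the 10 rows fires one event every 1/8 s for 6 steps (60 events in total).
WIDTH, HEIGHT, TRUE_VX = 20, 10, 8
events = [(5 + TRUE_VX * k / 8, y, k / 8, 1) for k in range(6) for y in range(HEIGHT)]

# Brute-force search over horizontal flow candidates (vy fixed to 0 to keep the example tiny).
candidates = [(vx, 0) for vx in range(17)]
best_flow = max(candidates, key=lambda f: contrast_of_flow(events, f, WIDTH, HEIGHT))
print(best_flow)  # (8, 0)
```

With the true flow, all 60 events collapse onto a single column, whereas any other candidate smears the edge over several pixels and lowers the variance. In a learning pipeline, the same kind of contrast score serves as a self-supervised loss on the network's flow predictions instead of being maximized by search.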

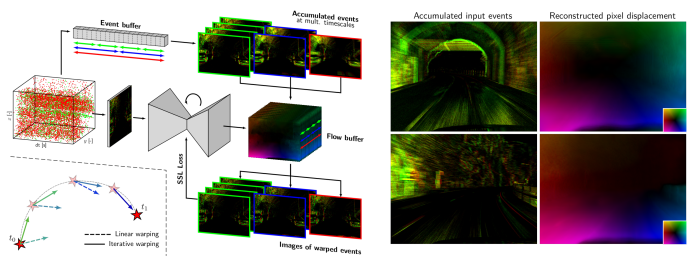

Figure 1: Our pipeline estimates event-based optical flow by sequentially processing small partitions of the event stream with a recurrent model. We propose a novel self-supervised learning framework (left) based on a multi-timescale contrast maximization formulation that is able to exploit the high temporal resolution of event cameras via iterative warping to produce accurate optical flow predictions (right).
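The caption mentions iterative warping. As a rough sketch of that idea, under our own simplifying assumptions (one flow field per partition, constant in time within it, and events warped only backward to the start of the sequence), an event can be moved to a reference time by chaining the flow estimates of every partition it crosses, sampling each field at the event's current warped position. The function name and interface are hypothetical; this is not the paper's implementation, and it leaves out the multi-timescale part of the loss.

```python
import math


def warp_iteratively(event, flows, bin_duration, t_ref=0.0):
    """Warp one event (x, y, t) back to t_ref by stepping through per-partition flow fields.

    flows[i] is a callable (x, y) -> (vx, vy), in px/s, valid during the
    partition [i * bin_duration, (i + 1) * bin_duration).
    """
    x, y, t = event
    if not 0.0 <= t_ref <= t:
        raise ValueError("this sketch only warps events backward to a non-negative t_ref")
    i = math.ceil(t / bin_duration) - 1  # partition containing the event's timestamp
    if i >= len(flows):
        raise ValueError("no flow estimate for this timestamp")
    # Walk backward one partition at a time; i strictly decreases, so the loop terminates.
    while t > t_ref and i >= 0:
        t_start = max(i * bin_duration, t_ref)  # stop at the partition boundary or at t_ref
        if t_start < t:
            vx, vy = flows[i](x, y)  # sample the flow at the event's current warped location
            dt = t - t_start
            x, y = x - vx * dt, y - vy * dt
            t = t_start
        i -= 1
    return x, y


# Two hypothetical 0.25 s partitions: motion to the right, then motion downward.
flows = [lambda x, y: (8.0, 0.0), lambda x, y: (0.0, 4.0)]
print(warp_iteratively((10.0, 5.0, 0.5), flows, 0.25))  # (8.0, 4.0)
```

Warping the same event with only the most recent estimate, (0, 4) px/s over the full 0.5 s, would instead land it at (10.0, 3.0). Chaining per-partition estimates lets the warp follow motion that changes over time, which is what allows small partitions and high inference rates to be combined with a loss computed over longer horizons.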

The paper on this work was presented at ICCV 2023 in Paris:

Taming Contrast Maximization for Learning

Federico Paredes-Vallés (1,2), Kirk Y. W. Scheper (2), Christophe De Wagter (1), Guido C. H. E. de Croon (1)

(1) Micro Air Vehicle Laboratory, Delft University of Technology,

(2) Stuttgart Laboratory 1, Sony Semiconductor Solutions Europe, Sony Europe B.V.

Additional material can be found at: https://mavlab.tudelft.nl/taming_event_flow/

Damjan Stamcar: Could you please describe the impact of the close collaboration with Sony scientists during the Sony RAP, and how it shapes your current research direction?

Dr. Federico Paredes Vallés: Participating in the Sony RAP as part of my thesis was a valuable opportunity, as it allowed me to work closely with Sony as an industry partner. The collaboration gave me direct access to my Sony counterpart, Dr. Kirk Scheper, so I could seek constructive feedback and gain a perspective different from the academic one.

The project designed for the Sony RAP had clearly defined quarterly deliverables, and the Statement of Work was tailored to align with both Sony's industrial focus and the objectives of my PhD research. This structure made for a productive, goal-oriented collaboration.

The overall experience of working closely with Sony was very positive and had a significant impact on my thesis. Consequently, I chose to continue this collaboration and joined Stuttgart Laboratory 1 as a (now Senior) Research Engineer. In this role, we continue to explore neuromorphic computing as an efficient approach to processing event-camera data.

Damjan Stamcar: The research proposes a paradigm shift in how data from an event-based camera should be processed. What use cases could benefit from this approach, and what obstacles would need to be overcome to deploy it in real-world scenarios?

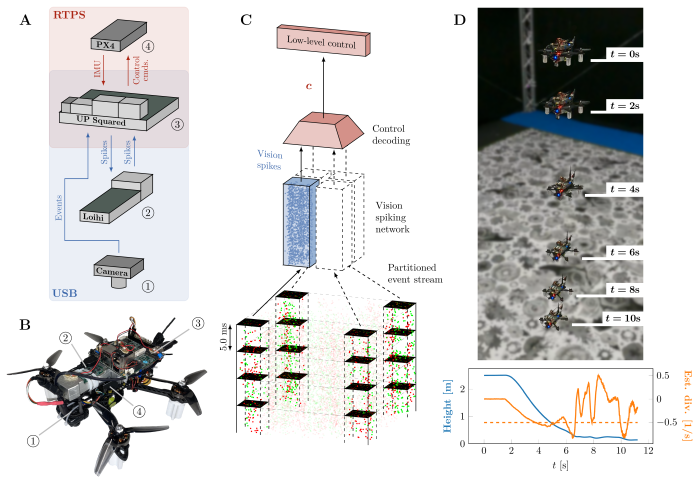

Dr. Federico Paredes Vallés: In our research, we propose a shift in how low-power and low-latency solutions for event-based cameras are developed. Instead of combining stateless neural network architectures with frames of accumulated events, we advocate focusing on recurrent neural networks. Because these networks carry a state over time, they can process data at higher rates even when the event stream is sparse. The approach we present in this Sony RAP collaboration opens avenues for future research, particularly in neuromorphic computing. Spiking networks on neuromorphic hardware can leverage the advantages of event cameras, but they need to process input events shortly after they arrive. Our framework takes a step toward this objective by enabling the estimation of optical flow in a nearly continuous manner, with all information integration happening within the model.

Potential applications for this technology include the automotive domain and computer vision on edge devices for low-power robotics, such as small autonomous flying robots. In a subsequent study, the research team at TU Delft successfully implemented a spiking variant of this algorithm on a neuromorphic processor on board a small flying vehicle. As demonstrated in the paper below, we achieved vision-based navigation using optical flow at over 200 Hz, consuming only 0.95 W of idle power and an additional 7–12 mW while our solution was running.

However, deploying this solution posed multiple challenges, mainly stemming from the neuromorphic hardware processing the event data. Because this technology is still at an early stage, we had to operate within tight computational limits and restrict the field of view to only the corners of the image, which impacted accuracy. We anticipate that, as the field advances, neuromorphic processors will become more powerful, eventually reaching performance similar to traditional neural network accelerators at a fraction of their power consumption.
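Unlike frame-based pipelines, a spiking network keeps a state in each neuron and updates it as soon as an input event arrives. A minimal sketch of a single event-driven leaky integrate-and-fire (LIF) neuron illustrates this; it is a generic textbook neuron model, not the spiking network deployed on the drone, and the time constant, threshold, and weight are hypothetical values.

```python
import math


class LIFNeuron:
    """Event-driven leaky integrate-and-fire neuron with hypothetical parameters."""

    def __init__(self, tau=0.01, threshold=1.0, weight=0.4):
        self.tau = tau              # membrane time constant in seconds
        self.threshold = threshold  # potential at which the neuron spikes
        self.weight = weight        # synaptic weight applied to every input event
        self.v = 0.0                # membrane potential: the neuron's internal state
        self.t_last = None          # timestamp of the previous input event

    def step(self, t):
        """Integrate one input event arriving at time t; return True if the neuron spikes."""
        if self.t_last is not None:
            # Exponential leak over the time elapsed since the previous event.
            self.v *= math.exp(-(t - self.t_last) / self.tau)
        self.t_last = t
        self.v += self.weight
        if self.v >= self.threshold:
            self.v = 0.0  # reset the potential after emitting a spike
            return True
        return False


burst = LIFNeuron()
print([burst.step(t) for t in (0.000, 0.001, 0.002)])  # [False, False, True]
```

A dense burst of events drives the membrane potential over threshold, while events spaced 50 ms apart leak away before they can add up. The neuron's state, rather than an accumulated frame, decides when information is passed on, which is why such models can react to each event shortly after it arrives.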

Figure 2: Overview of the proposed system. (A) Quadrotor used in this work (total weight 1.0 kg, tip-to-tip diameter 35 cm). (B) Hardware overview showing the communication between the event camera, neuromorphic processor, single-board computer, and flight controller. (C) Pipeline overview showing events as input, processing by the vision network, and decoding into a control command. (D) Demonstration of the system for an optical flow divergence landing.

Fully neuromorphic vision and control for autonomous drone flight

Federico Paredes-Vallés, Jesse J. Hagenaars, Julien D. Dupeyroux, Stein Stroobants, Yingfu Xu, Guido C. H. E. de Croon

Micro Air Vehicle Laboratory, Delft University of Technology