Tomohiro Kawahara of the Advanced Research Laboratory, Sony Group Corporation, received an award at SI2023 (the 24th System Integration Division Conference of the Society of Instrument and Control Engineers) for his presentation “Design and development of a robot arm with distributed sensors.”

Generally, a multi-degree-of-freedom (multi-DOF) actuation system, one that acts on the real environment as human arms and hands do, requires more computation as its number of DOF increases. In particular, when the surroundings change significantly, the system needs a massive amount of computation to keep working while avoiding collisions with other objects. This makes real-time processing, and therefore continued operation, difficult. To address this problem, we proposed distributing many sensors across the actuation system, and we have explored ways to use their data to generate motions in real time at low computational cost.

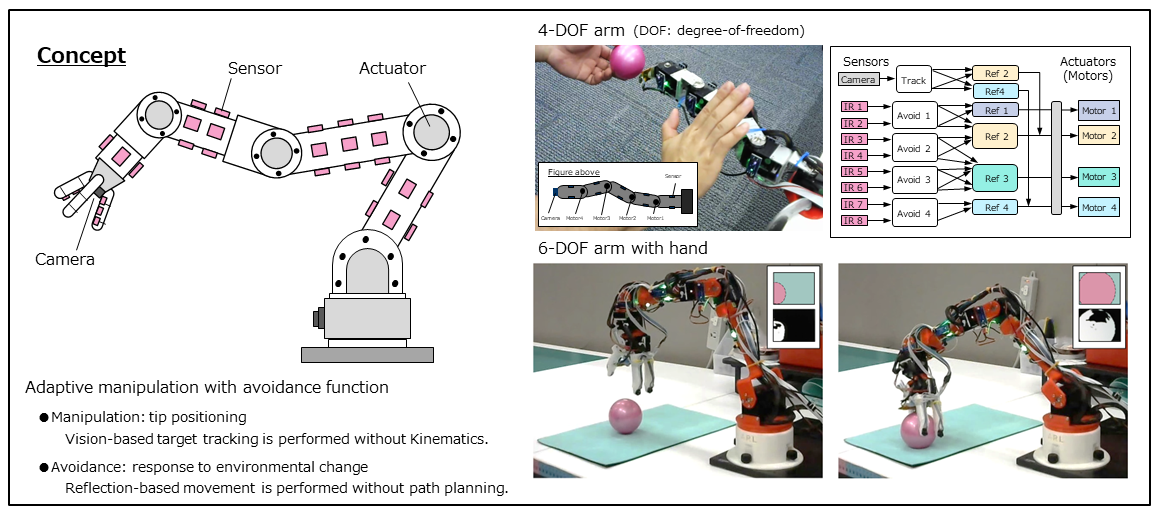

For an actuation system (for example, a robot arm-hand system) to perform a task, it must bring the tip of its hand close to the target object. When the task is performed in an ordinary environment, the system must also avoid colliding with other objects. Satisfying both requirements at once is crucial. We have developed a new method that combines two techniques. The first uses visual guidance from a vision sensor (camera) mounted on the hand to position the hand tip as it approaches the target object. The second actively uses data from distributed proximity sensors to avoid collisions with other objects during the task.

The key challenge in development is how to generate motion commands for the actuator in each joint from the numerous sensor signals. Microorganisms and insects, for example, adapt remarkably well to constant changes in their environment, and recent advances in biotechnology have revealed, among other things, their movement mechanisms and signal-processing pathways. We are exploring ways to generate motions for the entire actuation system by combining such biological signal-processing mechanisms with conventional system architectures, processing the integrated sensor signals in a parallel, distributed manner without complex calculations.
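The article does not describe how the SI2023 system computes its joint commands, so the following is only a minimal, hypothetical sketch of the general idea and not the authors' method. It assumes a planar serial arm and uses a standard Jacobian-transpose rule, which needs no matrix inversion: the hand camera's error (assumed here to be already converted into a base-frame vector) pulls the hand tip toward the target, and each proximity sensor that reads closer than a threshold `d0` pushes its own link away from the detected object. Every name (`ProximityReading`, `joint_command`, `step`, `k_att`, `k_rep`, `d0`) is an illustrative assumption.

```python
import math
from dataclasses import dataclass


@dataclass
class ProximityReading:
    """One distributed proximity sensor (hypothetical interface)."""
    link: int                        # link the sensor sits on (0 = link nearest the base)
    offset: float                    # mounting distance from that link's proximal joint [m]
    distance: float                  # measured distance to the nearest object [m]
    direction: tuple[float, float]   # unit vector (base frame) from the sensor toward the object


def forward_kinematics(q, lengths):
    """Joint positions p_0..p_{n-1} plus the hand tip p_n of a planar serial arm."""
    points = [(0.0, 0.0)]
    angle = x = y = 0.0
    for qi, li in zip(q, lengths):
        angle += qi
        x += li * math.cos(angle)
        y += li * math.sin(angle)
        points.append((x, y))
    return points


def sensor_position(points, q, r):
    """Base-frame position of a sensor mounted r.offset along link r.link."""
    angle = sum(q[: r.link + 1])  # absolute link angle = sum of joint angles up to it
    px, py = points[r.link]
    return (px + r.offset * math.cos(angle), py + r.offset * math.sin(angle))


def joint_command(i, points, pushes):
    """Velocity of joint i, using only its own position and the pushes
    (point, force, link) acting on links that joint i can move (link >= i)."""
    jx, jy = points[i]
    dq = 0.0
    for (px, py), (fx, fy), link in pushes:
        if link >= i:
            # for a planar revolute joint, the Jacobian column at p is perp(p - p_i)
            dq += -(py - jy) * fx + (px - jx) * fy
    return dq


def step(q, lengths, visual_error, readings, dt=0.1, k_att=1.0, k_rep=1.0, d0=0.1):
    """One control cycle: attraction at the hand tip, repulsion at nearby sensors."""
    points = forward_kinematics(q, lengths)
    n = len(q)
    # the camera error pulls the tip, which lies on the last link (index n - 1)
    pushes = [(points[n], (k_att * visual_error[0], k_att * visual_error[1]), n - 1)]
    for r in readings:
        if r.distance < d0:  # only sensors closer than d0 react
            gain = k_rep * (d0 - r.distance)
            s = sensor_position(points, q, r)
            pushes.append((s, (-gain * r.direction[0], -gain * r.direction[1]), r.link))
    # each joint's command is independent of the others, so these could run in parallel
    return [qi + dt * joint_command(i, points, pushes) for i, qi in enumerate(q)]
```

Because `joint_command` reads only its own joint position and the pushes acting on links it can move, each joint's update could in principle run on its own processor. For convenience, this sketch still computes forward kinematics centrally; a fully distributed version would need each joint to obtain the relevant positions locally.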

Based on these points, we built a horizontally driven four-degree-of-freedom (4-DOF) prototype, established guidelines for its design and implementation, and verified its basic motions. We also built a six-degree-of-freedom (6-DOF) arm-hand system, confirming that the approach extends to systems with more DOF and demonstrating its potential to generate adaptive motions at low computational cost. This work received the Excellent Presentation Award at SI2023.

Going forward, we plan to continue optimizing the system design and to evaluate its performance on tasks that have been particularly difficult with conventional approaches. Our research also takes into account possible future applications, such as integration with machine learning. We look forward to receiving expert feedback on this work.