In a joint research project with FullDepth Co., Ltd. and Hokkaido University, Shoji Ichiki and colleagues at Sony Group Corporation have created a seagrass bed recognition map using underwater visual sensing technology developed by the Sony Group. The aim is to quantify the amount of CO2 absorbed by blue carbon ecosystems.

We presented the research results in a poster session at WSC2024 & ISBW15 (2024 World Seagrass Conference & 15th International Seagrass Biology Workshop), a leading international conference on seagrass ecosystems, held from June 17 to 21, 2024.

Here is an overview of the presentation.

Research overview

Mangroves and seagrass beds have recently attracted attention as sinks that absorb and fix atmospheric CO2, one of the contributors to global warming. Assessing their effect requires calculating how much blue carbon marine ecosystems absorb and fix. In addition, preserving marine ecosystems requires monitoring the current condition of seagrass beds and measuring their biomass. Existing measurement techniques rely on image analysis using earth observation satellites and aircraft, including aerial drones, as well as on direct measurement by human divers.

These techniques have problems, however. In sea areas where the water is deep or turbid, for example, images captured by earth observation satellites or aircraft are of limited use for measurement. Diver-based surveys face an aging professional diver population and the risks of working long hours underwater. Location estimation poses a further problem. Because GNSS (Global Navigation Satellite System) signals do not reach underwater, underwater acoustic positioning is commonly used instead; however, in sea areas with tall seagrass, multipath interference of sound waves can cause inaccurate measurements, which hinders accurate location estimation.

To address these problems, Sony has developed a visual sensing device featuring a high-speed, high-sensitivity global shutter image sensor. Research is underway to locate seagrass bed habitats and measure their biomass, types, and other characteristics through location estimation and image analysis, using existing underwater drones equipped with this device.

Research results

The joint research project has led to three major technological breakthroughs.

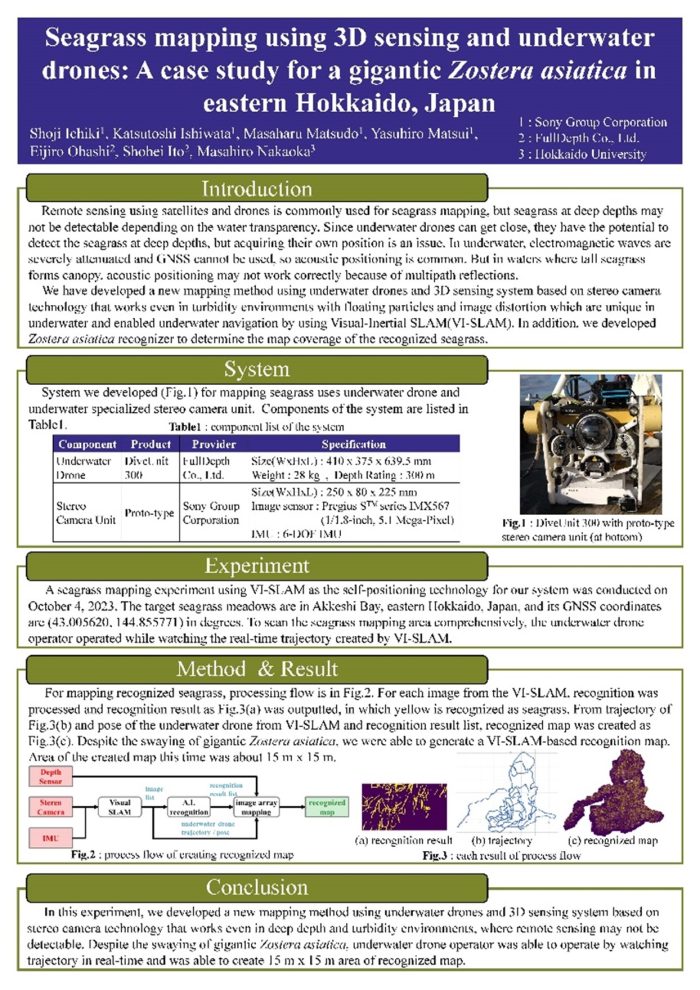

- Image sharpening technology tailored to underwater conditions such as turbidity and suspended solids

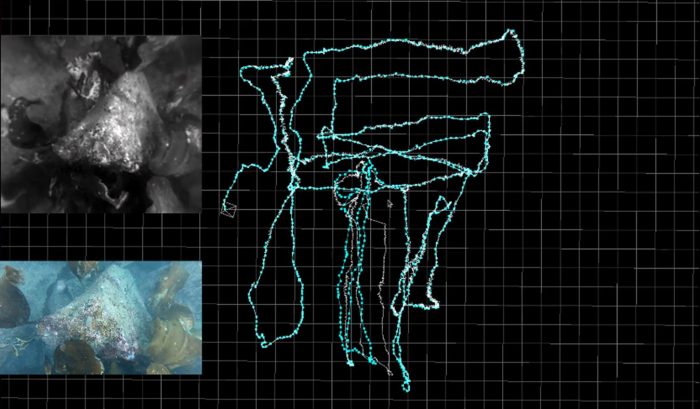

- Adaptation of 3D sensing technology developed for land-based applications to the underwater environment. Applying Visual-Inertial SLAM, an autonomous location estimation technology used in autonomous mobile robots and drones, underwater makes it possible to estimate the position of an underwater drone in real time.

- AI-based recognition of seagrass and seaweed coverage

We have also developed a method of creating a seagrass bed recognition map from the images, recognition results, and location information obtained using these technologies. In experiments, an operator who piloted the underwater drone while viewing the trajectory estimated by Visual-Inertial SLAM was able to cover the entire area and create a complete seagrass bed recognition map.
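The article does not describe how the recognition map is assembled. As a minimal sketch, assuming each camera frame yields an estimated drone position in metres (for example from Visual-Inertial SLAM) and a per-pixel seagrass mask from the recognizer, frames could be binned into a square grid and their coverage averaged per cell. The names `Observation`, `build_coverage_map`, `unvisited_cells`, and `coverage_fraction`, and the 1 m default cell size, are hypothetical illustrations, not Sony's implementation.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Observation:
    """One camera frame (hypothetical record): estimated position and coverage."""
    x_m: float       # easting of the frame centre in metres (assumed SLAM output)
    y_m: float       # northing of the frame centre in metres (assumed SLAM output)
    coverage: float  # fraction of the frame recognized as seagrass, 0.0 to 1.0


def coverage_fraction(mask):
    """Fraction of pixels labelled seagrass in a 2D boolean mask (list of rows)."""
    total = sum(len(row) for row in mask)
    if total == 0:
        return 0.0
    return sum(1 for row in mask for px in row if px) / total


def build_coverage_map(observations, cell_size_m=1.0):
    """Average per-frame seagrass coverage into square grid cells.

    Returns a dict mapping (col, row) cell indices to mean coverage.
    """
    sums = defaultdict(float)
    counts = defaultdict(int)
    for obs in observations:
        # Floor division assigns each position to the cell that contains it.
        cell = (int(obs.x_m // cell_size_m), int(obs.y_m // cell_size_m))
        sums[cell] += obs.coverage
        counts[cell] += 1
    return {cell: sums[cell] / counts[cell] for cell in sums}


def unvisited_cells(coverage_map, width_cells, height_cells):
    """List cells of a rectangular survey area that no frame has covered yet."""
    return [
        (col, row)
        for row in range(height_cells)
        for col in range(width_cells)
        if (col, row) not in coverage_map
    ]


if __name__ == "__main__":
    frames = [
        Observation(0.2, 0.3, coverage_fraction([[True, False], [False, False]])),
        Observation(0.7, 0.1, 0.5),
        Observation(1.5, 0.4, 1.0),
    ]
    grid = build_coverage_map(frames, cell_size_m=1.0)
    print(grid)                           # {(0, 0): 0.375, (1, 0): 1.0}
    print(unvisited_cells(grid, 2, 2))    # [(0, 1), (1, 1)]
```

Listing unvisited cells is a simplified analogue of the experiment described above, in which the operator used the estimated trajectory to make sure the whole area was covered; a real system would also need georeferencing and handling of frame footprints larger than one cell.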

Seagrass ecosystem researchers and remote sensing engineers who participated in the conference offered positive feedback on the underwater drone-based visual sensing approach and verification results.

Additional information

We are also applying the technologies described above to 3D modeling of the underwater environment.

Here is a video showing a 3D model generated from images of coral reefs off Ishigaki Island captured by an underwater drone.

It shows the fine detail a 3D model can reproduce when imaging and image recognition technologies that capture high-definition images are combined with drone positioning technology and image processing technology for model generation.

By applying its image sensor and image signal processing technologies underwater, the Sony Group is developing technologies to quantify blue carbon ecosystems and visualize the underwater environment. Through this work, we aim to contribute to addressing global environmental challenges and maritime issues.